A Step-by-Step Guide for Deploying LLMs with MindsDB and OpenAI

Mastering LLM Deployments with MindsDB and OpenAI

Introduction

A large language model (LLM) is a machine learning model that is trained on vast amounts of data and can perform natural language processing (NLP) tasks such as analyzing and summarizing text, translating languages, answering questions, detecting sentiment, generating text, and more.

The model generates coherent and contextually relevant human-like responses based on the data it was trained on and the input it receives.

MindsDB allows you to create models that use OpenAI GPT-4 and deploy them so that you can perform NLP tasks directly within your database.

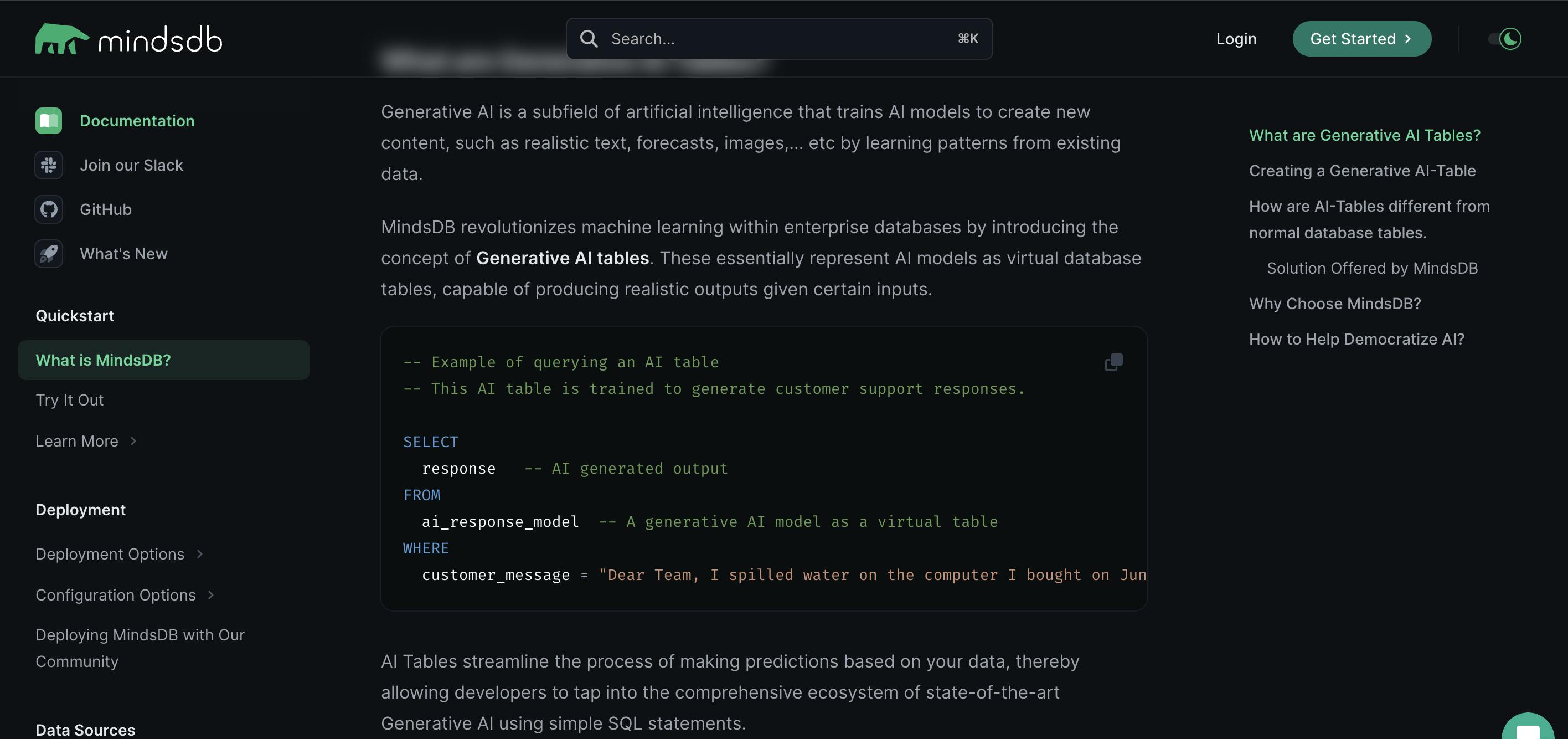

The MindsDB Generative AI Tables concept makes this possible.

AI Tables refer to machine learning models stored within a database as virtual tables.

In this article, you will learn how to deploy LLMs with MindsDB and OpenAI.

We will leverage the OpenAI engine made available by MindsDB to create and deploy models that can rephrase text, determine word count, and analyze & respond to customers’ reviews.

Additionally, MindsDB allows you to fine-tune some LLMs with specialized or task-specific datasets to improve their performance and applicability to specific tasks.

Prerequisites

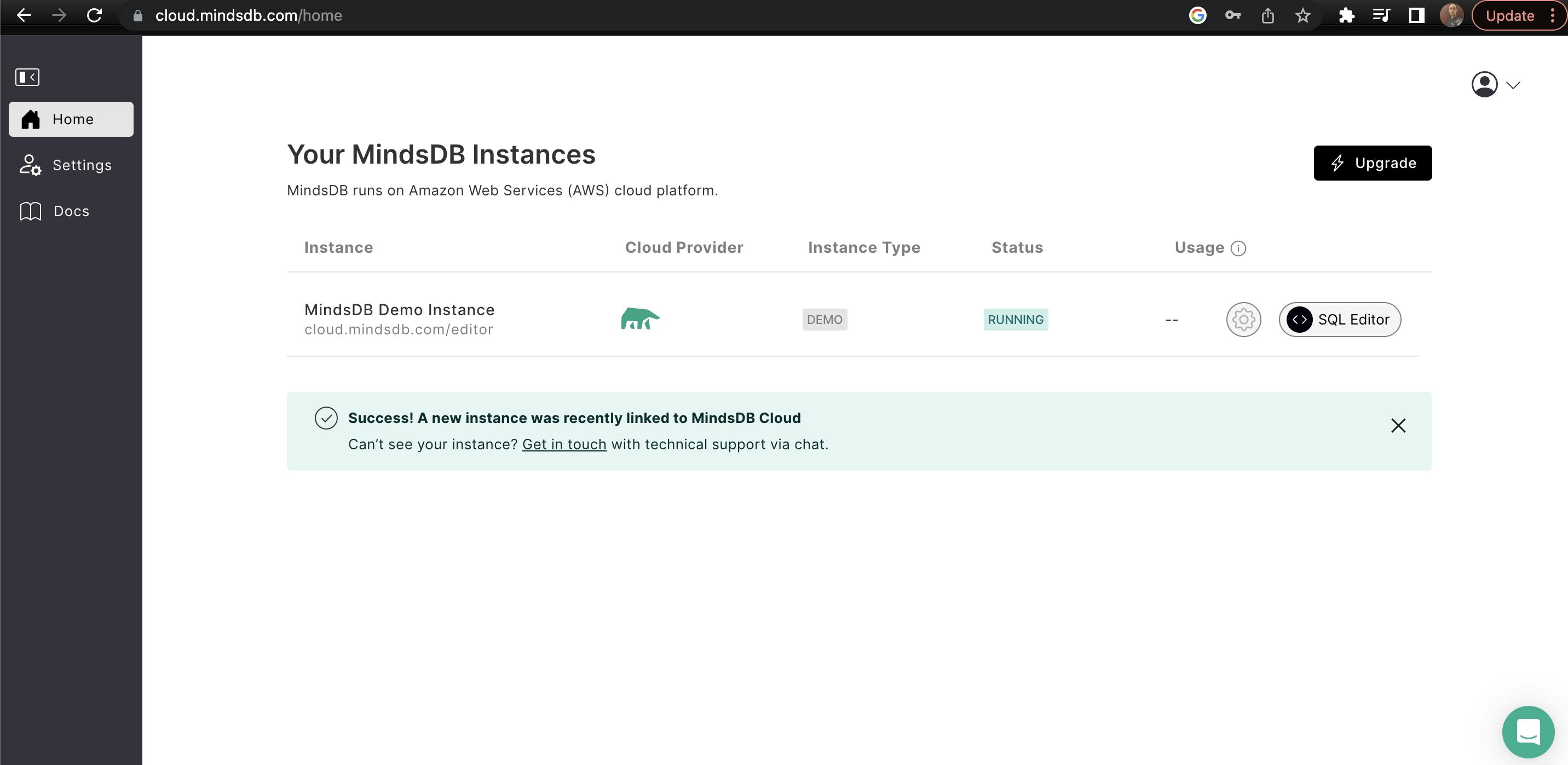

To follow along with this tutorial, you need a MindsDB Cloud account.

If you prefer a local instance, set up MindsDB via Docker or pip.

Connecting to a Database

Let’s start by connecting MindsDB to a public MySQL demo database.

We will use a few tables inside the MySQL public database to demonstrate how to deploy LLMs.

CREATE DATABASE project_llm

WITH ENGINE = 'mysql',

PARAMETERS = {

"user": "user",

"password": "MindsDBUser123!",

"host": "db-demo-data.cwoyhfn6bzs0.us-east-1.rds.amazonaws.com",

"port": "3306",

"database": "public"

};

We have successfully connected our database to MindsDB with the name project_llm.

Run the following query to see its tables:

SHOW FULL TABLES FROM project_llm;
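Before building any model, it can help to preview the tables used later in this tutorial. The queries below are a minimal sketch; they assume that the emails, user_comments, and amazon_reviews tables referenced in the following sections are present in the connected database.

```sql
-- Preview a few rows from each table used in this tutorial.
-- LIMIT keeps the result small; adjust it as needed.
SELECT * FROM project_llm.emails LIMIT 3;

SELECT * FROM project_llm.user_comments LIMIT 3;

SELECT * FROM project_llm.amazon_reviews LIMIT 3;
```

The column names returned here (email_content, comment, and review) are the ones the prompt templates refer to later.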

Rephrasing Text

Recall that in this tutorial we create and deploy three different models: one to rephrase text, one to determine word count, and one to analyze and respond to customers’ reviews.

In this section, we will create a model for rephrasing text. We will call it the rephraser_model. This model will rephrase email content more politely.

CREATE MODEL rephraser_model

PREDICT paraphrase

USING

engine = 'openai',

prompt_template = 'rephrase content in a more polite way. {{email_content}}',

api_key = 'YOUR_OPENAI_API_KEY';

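The model uses the OpenAI engine. The prompt_template parameter stores the message to be answered by the model; the {{email_content}} placeholder is replaced with the value of the email_content column for each row.

On MindsDB Cloud, the api_key parameter is optional. It is mandatory for local installations and for MindsDB Pro.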

For convenience and to avoid repetition, you can create a MindsDB ML engine to hold your API key. This way, you don’t have to enter the API key each time you want to create a model.

CREATE ML_ENGINE openai2

FROM openai

USING

api_key = 'YOUR_OPENAI_API_KEY';
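As a sketch of how the stored key is reused, the statements below list the available ML engines and then create a model that points at openai2 instead of passing api_key directly. The model name rephraser_model_v2 is hypothetical and used only for illustration.

```sql
-- Confirm that the openai2 engine was registered.
SHOW ML_ENGINES;

-- Create a model that relies on the API key stored in the openai2 engine.
-- rephraser_model_v2 is a hypothetical name for this illustration.
CREATE MODEL rephraser_model_v2
PREDICT paraphrase
USING
    engine = 'openai2',
    prompt_template = 'rephrase content in a more polite way. {{email_content}}';
```

The rest of this section continues with rephraser_model.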

Check the status of your model. You can only use a model once it has finished generating, which usually takes just a moment.

DESCRIBE rephraser_model;
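If you prefer to check several models at once, the following sketch shows two other ways to inspect status. It assumes the model was created in the default mindsdb project; use a different project name if yours differs.

```sql
-- List all models in the current project together with their status.
SHOW MODELS;

-- Look up a single model by name.
-- Assumes the model lives in the default mindsdb project.
SELECT *
FROM mindsdb.models
WHERE name = 'rephraser_model';
```

Wait until the status column reports that the model is complete before querying it.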

Now that our model is ready, let’s ask it to rephrase some text for us.

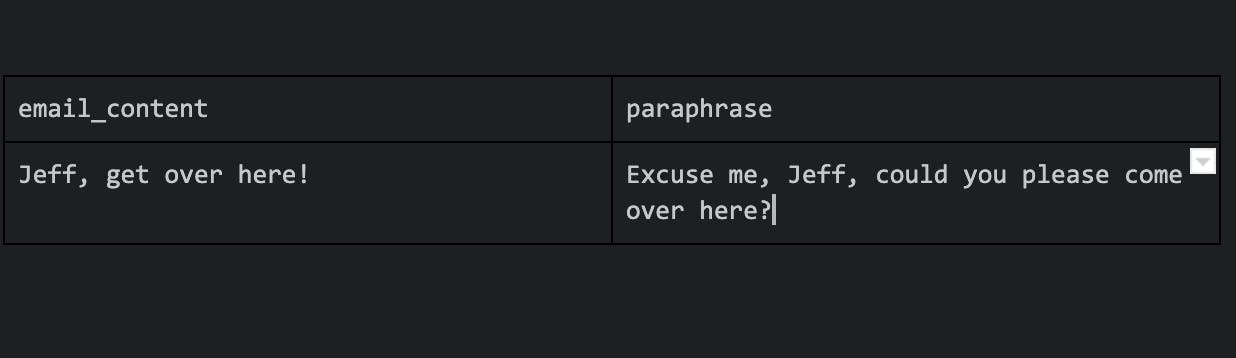

SELECT email_content, paraphrase

FROM rephraser_model

WHERE email_content = "Jeff, get over here!";

Output:

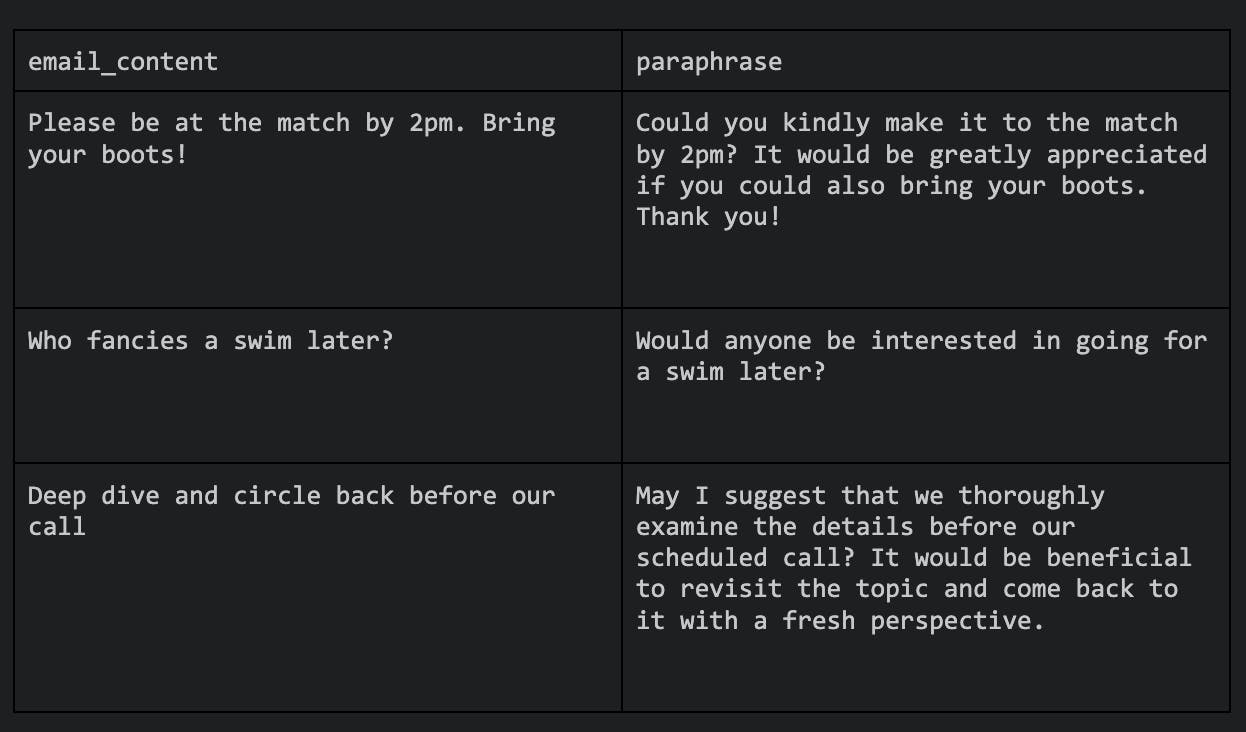

Let’s do a batch prediction by joining our rephraser_model to the emails table to rephrase some email content more politely.

SELECT input.email_content, output.paraphrase

FROM project_llm.emails AS input

JOIN rephraser_model AS output

LIMIT 3;
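If you want to reuse a batch prediction without retyping the join, one option is to wrap it in a MindsDB view. This is a minimal sketch rather than part of the original walkthrough; the view name polite_emails is hypothetical.

```sql
-- Wrap the batch prediction in a view so it can be queried by name.
-- polite_emails is a hypothetical view name.
CREATE VIEW polite_emails AS (
    SELECT input.email_content, output.paraphrase
    FROM project_llm.emails AS input
    JOIN rephraser_model AS output
    LIMIT 3
);

-- Query the view like any other table.
SELECT * FROM polite_emails;
```

Keep in mind that each row sent to the model results in a call to OpenAI, so a small LIMIT is a sensible default while experimenting.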


Determining Word Count

Following the same process, let’s create and deploy a model to determine the word count of comments.

Create the model:

CREATE MODEL word_count_model

PREDICT count

USING

engine = 'openai2',

prompt_template = 'describe the word count of the comments strictly as "2 words", "3 words", "4 words", and so on.

"this is great" : 3 words

"I want this good" : 4 words

"I want bread right now" : 5 words

"please take note and effect changes": 6 words

"{{comment}}.":';

Optionally, you can add the max_tokens and temperature parameters to USING. max_tokens sets the maximum number of tokens the model may generate for a prediction. temperature controls how random, or risky, the answer is: lower values give more predictable output.
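To illustrate the optional parameters, here is a sketch of the same model with both set. The model name word_count_model_tuned and the values 10 and 0 are assumptions chosen for illustration: a short answer such as "5 words" needs few tokens, and a low temperature suits a task with a single correct answer.

```sql
-- Variant of the word count model with explicit generation settings.
-- word_count_model_tuned and the parameter values are hypothetical.
CREATE MODEL word_count_model_tuned
PREDICT count
USING
    engine = 'openai2',
    max_tokens = 10,
    temperature = 0,
    prompt_template = 'describe the word count of the comments strictly as "2 words", "3 words", "4 words", and so on.
"this is great" : 3 words
"I want bread right now" : 5 words
"{{comment}}.":';
```

The rest of this section continues with word_count_model.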

Check the status of your model, and then test it.

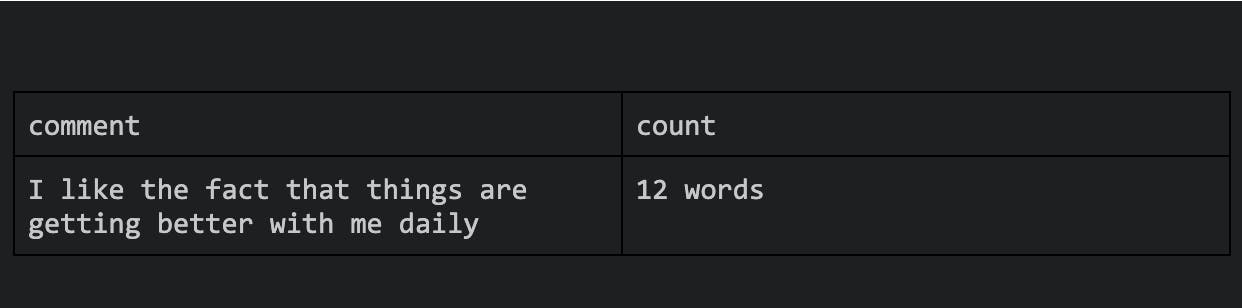

SELECT comment, count

FROM word_count_model

WHERE comment = 'I like the fact that things are getting better with me daily';


Let’s do a batch prediction by joining our model to the user_comments table.

SELECT input.comment, output.count

FROM project_llm.user_comments AS input

JOIN word_count_model AS output

LIMIT 3;


Analyzing and Responding to Customers’ Reviews

Let's create a model to analyze and respond to customers' reviews.

The model will first analyze and determine if a review is positive or negative.

If negative, the model responds with an apology and promises to make their experience better next time.

If positive, it will respond with a thank you note and ask them for a referral.

CREATE MODEL auto_response_model

PREDICT response

USING

engine = 'openai',

prompt_template = 'Give a one sentence response to customers based on the review type.

"I love this product": "positive"

"It is not okay" : "negative"

If the review is negative, respond with an apology and promise to make it better.

If the review is positive, respond with a thank you and ask them to refer us to their friends.

{{review}}';

Let’s query the model with some synthetic data.

SELECT review, response

FROM auto_response_model

WHERE review = "I am not happy about this product";

Output:

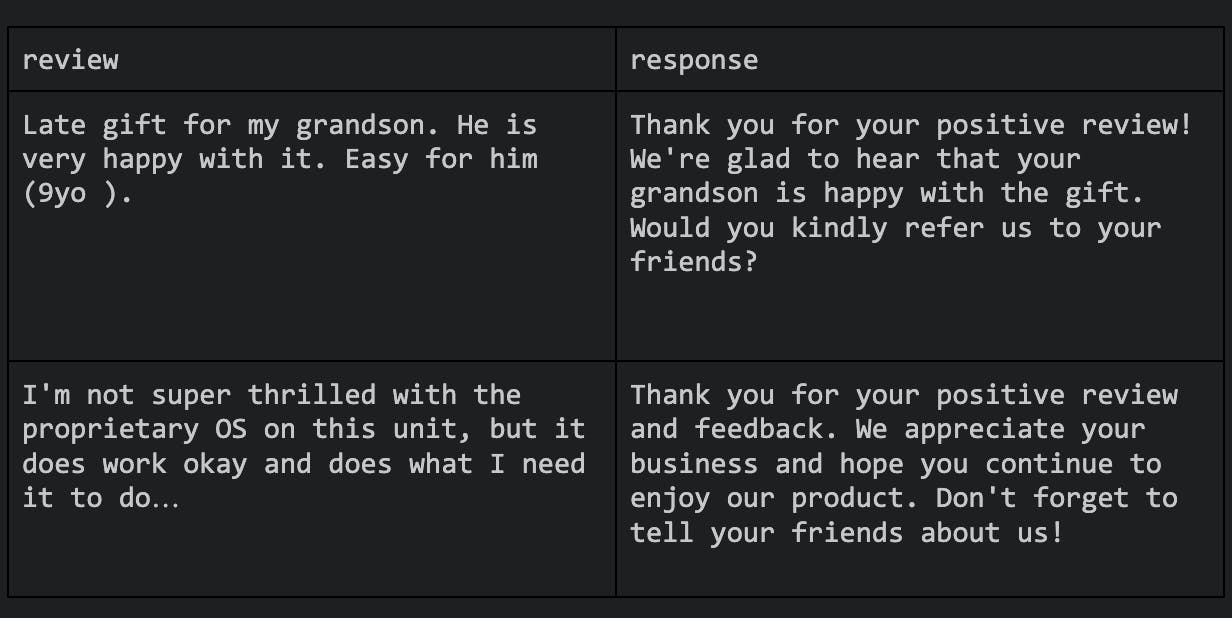

Make a batch prediction.

SELECT input.review, output.response

FROM project_llm.amazon_reviews AS input

JOIN auto_response_model AS output

LIMIT 2;
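The auto_response_model classifies the review and writes the reply in a single step. If you also want to see the classification on its own, a separate model can return only the sentiment label. The sketch below assumes the same review column; the model name sentiment_model and its prompt wording are hypothetical.

```sql
-- A model that returns only the sentiment label for a review.
-- sentiment_model and its prompt are hypothetical illustrations.
CREATE MODEL sentiment_model
PREDICT sentiment
USING
    engine = 'openai',
    prompt_template = 'describe the sentiment of the review strictly as "positive" or "negative".
"I love this product": positive
"It is not okay": negative
"{{review}}":';

-- Once the model is ready, compare each review with its label.
SELECT input.review, output.sentiment
FROM project_llm.amazon_reviews AS input
JOIN sentiment_model AS output
LIMIT 2;
```

Seeing the label next to the review makes it easier to judge whether a generated response matches the tone of the review.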


It is important to note that the models we have built are production ready.

MindsDB simplifies the process of building and deploying machine learning models by designing them to be production ready by default, so you don’t need a separate MLOps flow.

It makes using machine learning in your database easier and faster without requiring extensive ML expertise.

Conclusion

MindsDB allows you to use pre-trained OpenAI natural language processing (NLP) models inside your database to perform NLP tasks easily and derive valuable information from text data with only a few SQL queries or by using REST endpoints.

The MindsDB OpenAI integration enables developers to leverage natural language queries to analyze data and efficiently gain a deeper understanding of their data.

Introducing ML capabilities into your database enables you to make data-driven decisions and scale your product by making insightful predictions.

Sign up for a free demo account to get hands-on experience with MindsDB’s NLP capabilities.

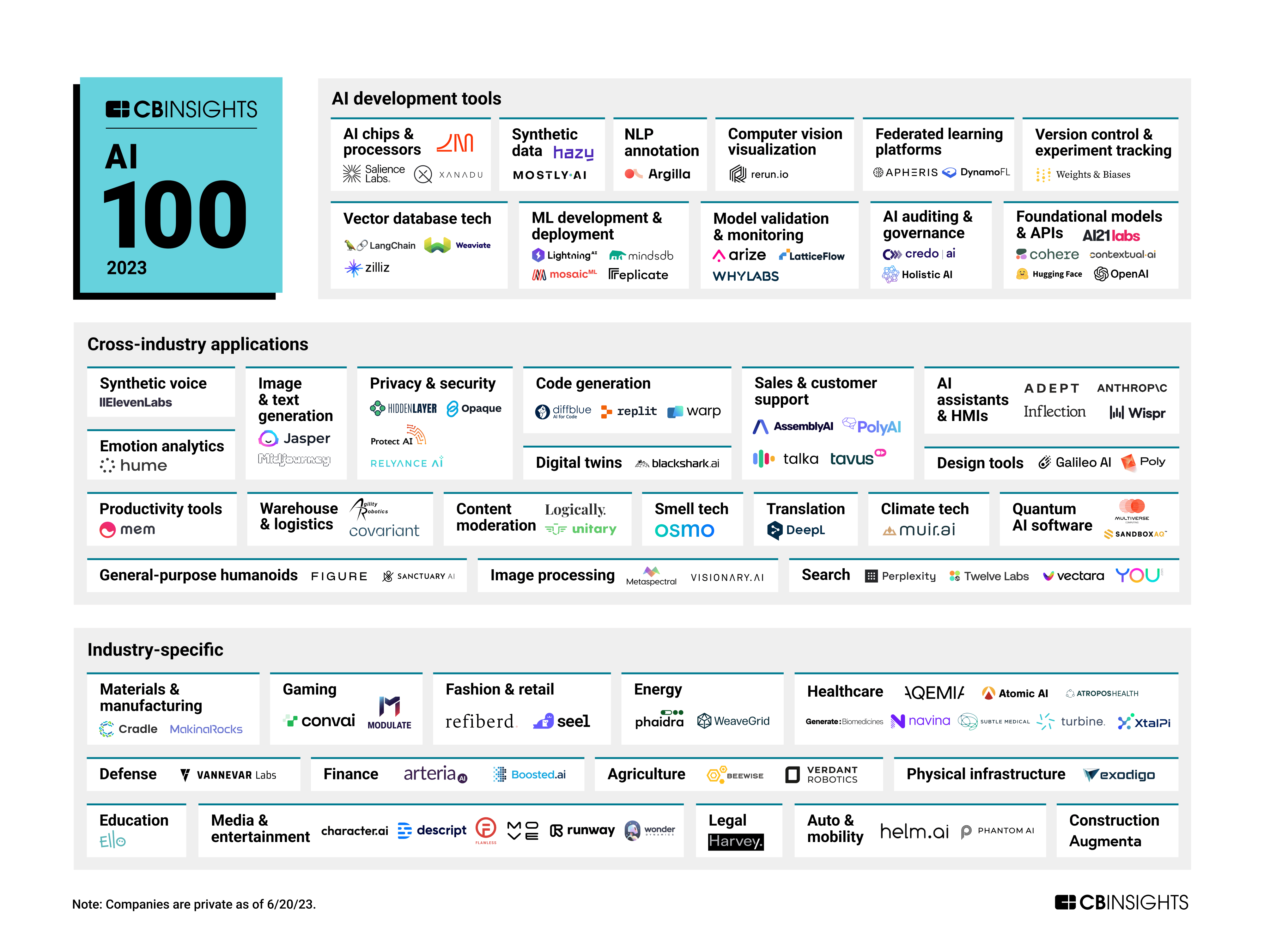

MindsDB is one of the most promising artificial intelligence startups of 2023.

That’s the end of this post. I hope you learned something new today.

If you did, please like/share it so that others can see it as well.

Thank you for being a regular reader; you’re a big part of why I’ve been able to share my life/career experiences with you.

Follow MindsDB on Twitter for the most recent updates, and if you have any questions or problems, you can join their Slack server to discuss and get help.

Follow me on Twitter to get more writing and software engineering articles.
